Imaging Bad Hard Drives (with a Synology NAS)

This is what I learned while trying to recover data from a bad drive. Most of this post should also work for imaging a disk on a regular non-Synology Linux system.

Background

I’m currently trying to recover data from a 2009 iMac with a bad 250GB hard drive. The drive has many I/O errors reading it, and won’t boot into macOS. It mounted once, but then I foolishly tried to ‘repair’ the disk in Disk Utility.

My local mac repair shop was thankfully able to extract the hard drive from the iMac, by pulling off the screen (which is held in with magnets) using suction cups, undoing screws of rare shape, and fishing out the SATA drive.

They tried plugging in the drive to many other iMacs, but none of them would boot it. Most of the time the drive would spin up, then spin down. Sometimes it would come up as ‘Uninitialised’. I suppose my attempted filesystem repair + I/O write errors = corrupted file system. Dang.

So now I have a disk, and I want to get the data off it. Maybe even try to repair the filesystem again. But I don’t think I want to write to this disk again: too many I/O errors. I want to copy the disk to another computer.

Synology NAS

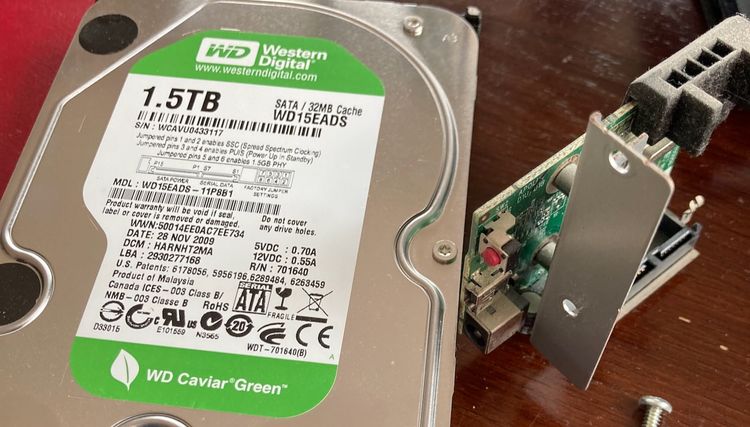

I have a Synology Network Attached Storage device at home: the DS418play model, with four drive bays. It’s been handy during these data recovery jobs. I have three drives in it, and a spare slot. I’d like to put the bad drive in the spare slot and recover its data to a disk image file on the other disks.

Synology’s OS is Linux with a stripped-down set of userland binaries.

Data Recovery Tools

Usually I use cat /dev/sda > ~/sda.img to image a disk. Or pv /dev/sda > ~/sda.img if I’m feeling fancy and want a progress bar.

Unix has a culture of using dd, a funny tool with roots in other operating systems, with a funny command-line-flag convention differing from other Unix tools: usually people use dd if=/dev/sda of=~/sda.img, optionally setting a magic bs=xxx block size parameter, copy/pasted from the last time you did the job. Presumably, at one point, setting block size made a difference to the transfer speed? I’m fairly sure kernels today do the appropriate read-ahead though. I haven't seen differences in speed leaving it off.

cat and pv will fail the first time they get an I/O error.

dd will also fail by default, but it has a parameter conv=sync,noerror instructing it to carry on past read errors and output zeroes for that chunk instead.
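As a rough sketch of that dd invocation, here it is run against a scratch file standing in for the disk. The temp files and the 4096-byte block size are my assumptions for illustration; on a real job the input would be something like /dev/sda. As far as I understand it, a small bs matters in this mode because a failed read is padded out to a whole block of zeroes, so a big block size throws away more good data around each bad sector.

```shell
# Scratch files standing in for the bad disk and the image (illustration only).
src=$(mktemp)
img=$(mktemp)
head -c 1048576 /dev/urandom > "$src"

# noerror: keep going after a read error.
# sync: pad short or failed reads to a full block with zeroes.
dd if="$src" of="$img" bs=4096 conv=sync,noerror 2>/dev/null

# On a healthy source the image is byte-for-byte identical.
cmp "$src" "$img" && echo "image matches source"
```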

These tools work. However, there’s a better tool for imaging near-death hard drives: ddrescue, which reads the drive while keeping track, in an auxiliary “map file”, of which segments were read successfully and which need to be revisited. You use ddrescue like: ddrescue /dev/sda ~/sda.img ~/sda.map.

ddrescue uses many strategies, including:

- retrying on errors

- reading the disk both forwards and backwards

- direct disk I/O (skipping the kernel block cache)

- and reducing the size of reads until they succeed.

ddrescue is also restartable, picking up state from its map file.
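To give a feel for what the map file holds, here is a small sketch that totals the bytes in each state. The sample map is made up for illustration, and the layout (comment lines starting with #, one status line, then pos/size/status rows where + means rescued and - means a bad sector) is my reading of the ddrescue manual, so check it against your own sda.map. The summarise_map name is mine.

```shell
# A made-up sample map; point summarise_map at your own sda.map instead.
map=$(mktemp)
cat > "$map" <<'EOF'
# Mapfile. Created by GNU ddrescue
# current_pos  current_status  current_pass
0x00120000     ?               1
#      pos        size  status
0x00000000  0x00100000  +
0x00100000  0x00020000  -
0x00120000  0x00EE0000  ?
EOF

# Sum the block sizes (hex) per state: rescued (+), bad (-), everything else pending.
summarise_map() {
    rescued=0; bad=0; pending=0; seen_status_line=0
    while read -r pos size status rest; do
        case "$pos" in ''|'#'*) continue ;; esac       # skip blank and comment lines
        if [ "$seen_status_line" -eq 0 ]; then         # first data line is the status line
            seen_status_line=1
            continue
        fi
        case "$status" in
            '+') rescued=$((rescued + $size)) ;;
            '-') bad=$((bad + $size)) ;;
            *)   pending=$((pending + $size)) ;;
        esac
    done < "$1"
    echo "rescued=$rescued bad=$bad pending=$pending"
}

summarise_map "$map"   # prints: rescued=1048576 bad=131072 pending=15597568
```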

Installing ddrescue on Synology

ddrescue isn’t installed by default on Synology’s OS. But you can install it from the SynoCli Disk Tools Community Package. I downloaded the x86_64 variant for DSM 7.x for my NAS, because I have an Intel chip, and I found I was running version 7.x of the NAS software in Control Panel - Information Center.

I downloaded the package to my laptop, then installed the package from the Synology web UI.

Running ddrescue on Synology

I made a new ’Shared Folder’ where the data should go: imac.

Set up SSH access in the Synology UI. SSH in:

$ ssh admin@synology

Figure out which disk to image, by first looking to see which disks are available before you put the to-be-recovered disk in:

$ ls /dev/sd?

/dev/sdb /dev/sdc /dev/sdd

Insert the to-be-recovered disk, and see which disk is new:

$ ls /dev/sd?

/dev/sda /dev/sdb /dev/sdc /dev/sdd

For me, the new disk is /dev/sda.
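If you don't trust yourself to spot the difference by eye, the comparison can be sketched like this. The new_entries helper is a name I made up, and the two lists are filled with the sample output from above; on the NAS you would instead run ls /dev/sd? into one file before inserting the disk and into another file afterwards.

```shell
# Print the lines of file $2 that do not appear in file $1.
new_entries() {
    grep -vxFf "$1" "$2"
}

before=$(mktemp)
after=$(mktemp)
printf '%s\n' /dev/sdb /dev/sdc /dev/sdd > "$before"            # sample: before inserting the disk
printf '%s\n' /dev/sda /dev/sdb /dev/sdc /dev/sdd > "$after"    # sample: after inserting the disk

new_entries "$before" "$after"   # prints: /dev/sda
```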

Now let’s run ddrescue. A few prerequisites here:

- Disk access needs root access. So we need sudo.

- We want the command to keep running after the ssh connection closes (I don’t want the transfer to stop when my laptop disconnects). Usually I would start the command in tmux, but Synology doesn’t have tmux, so I am using the lower-level nohup command.

- For sudo and nohup to work together, you need to use sudo -b to have the sudo’d command run in the background. If you instead try and use ampersand to background the command, the sudo password prompt will be backgrounded and sudo will hang.

$ cd /volume1/imac

$ sudo -b nohup ddrescue /dev/sda sda.img sda.map

Now you can disconnect your shell session, then re-ssh in, and check that it’s still running:

$ ps aux | grep ddrescue

You can follow the progress by tailing the nohup.out output file:

$ tail -f nohup.out

Advanced ddrescue flags

The imaging started off very slow. It read 500MB in the first day, and 1GB in the second day. For a 250GB hard drive, I’m going to be lucky if it finishes this year. This is probably due to all the I/O error retrying, though I'm not sure at which layer (disk? kernel? ddrescue?).

To speed it up, I am copy/pasting Stack Overflow lore: I am playing with the flags -v and -vv for verbose output (although this seems to have no effect that I can discern), and --idirect to skip the kernel block cache when reading the input, which seems useful if the disk has bad sectors and the kernel might be reading larger chunks into cache? I'm not sure.
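One piece of lore that I believe comes from the examples in the ddrescue manual is to image in two passes: a quick first pass that skips the slow scraping phase, then a retry pass with direct I/O over whatever is left. A sketch, using the short flags -n (no scrape), -d (the short form of --idirect) and -r (retry passes). The helper names first_pass and retry_pass are mine, not ddrescue's, and the retry count of 3 is just a starting point.

```shell
# Pass 1: grab the easy data first; -n skips the slow scraping phase.
first_pass() {
    sudo ddrescue -n "$1" "$2" "$3"
}

# Pass 2: go back over the bad areas with direct I/O (-d), retrying up to 3 times (-r3).
# Because the map file is reused, areas already rescued are not read again.
retry_pass() {
    sudo ddrescue -d -r3 "$1" "$2" "$3"
}

# Usage (arguments: device, image file, map file):
#   first_pass /dev/sda sda.img sda.map
#   retry_pass /dev/sda sda.img sda.map
```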

As a newbie, I found the ddrescue docs fairly daunting. I'll dive into them later if I need to.

Aside: Slow disks & Uninterruptible sleep

Sometimes ddrescue is very slow, reading a bad sector. I wanted to restart it to try some other flags. But sudo killall ddrescue doesn’t stop the process! What’s going on?

Looking at its call stack in /proc shows that it's stuck in a read syscall (SyS_read), which is trying to do direct I/O on the disk (do_blockdev_direct_IO):

$ sudo cat /proc/$(pidof ddrescue)/stack

[<ffffffff811d3f18>] do_blockdev_direct_IO+0x2058/0x2ed0

[<ffffffff811d4dcf>] __blockdev_direct_IO+0x3f/0x50

[<ffffffff811cf8cb>] blkdev_direct_IO+0x4b/0x50

[<ffffffff81133348>] generic_file_read_iter+0xc8/0x690

[<ffffffff811cfc20>] blkdev_read_iter+0x30/0x40

[<ffffffff8118cea5>] new_sync_read+0x85/0xb0

[<ffffffff8118cef7>] __vfs_read+0x27/0x40

[<ffffffff8118e3cc>] vfs_read+0x7c/0x130

[<ffffffff8118fe4c>] SyS_read+0x5c/0xc0

[<ffffffff81579c4a>] entry_SYSCALL_64_fastpath+0x1e/0x8e

[<ffffffffffffffff>] 0xffffffffffffffff

And ps shows that the ddrescue process is in state D, or “uninterruptible sleep”. This means you can’t interrupt (kill, signal) the app!

$ ps aux | grep ddrescue

root 15419 0.0 0.1 13324 2668 ? D Mar04 0:02 ddrescue --no-scrap --no-trim --idirect -vv /dev/sda sda.img sda.map
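Another way to check for this state, without scanning ps output, is to read the State line that Linux exposes in /proc. A minimal sketch: the proc_state helper name is mine, and it is run here against the current shell so it is safe to try. For the stuck process you would pass $(pidof ddrescue) instead, and I'd expect to see D (disk sleep).

```shell
# Print the kernel's view of a process's state from /proc/<pid>/status.
proc_state() {
    grep '^State:' "/proc/$1/status"
}

# Demo on the current shell; swap in the ddrescue PID on the NAS.
proc_state "$$"
```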

This StackOverflow thread is full of people who have unkillable processes blocking on hardware I/O. And the only advice they are getting is to reboot the machine. Operating systems are supposed to allow you to multiplex processes over hardware and allow starting & stopping processes, but sometimes the kernel is programmed in a way where the process can’t be interrupted in the middle of talking to the disk, assuming that disk access will be fast.

But this is a bad disk, and it’s probably internally retrying the read request what must be hundreds of times before returning. Or perhaps the kernel is trying a larger read to fill its block cache, and that’s taking a long time.

It's pretty frustrating to kill the program and have nothing happen. The only way I have found to get the read call to return and the program to be killed is to either:

- Yank out the drive, or

- Reboot the NAS

I wonder if other, non-Linux OSs do better here? Or do all OSs have a concept of uninterruptible sleep on disk I/O? You wouldn’t build a distributed system in this way. Assuming disks are fast to respond seems unfortunate.

Conclusion

You can image a drive in a Synology, even if the drive has bad sectors. Hope this helps!

Please let me know if you know any tricks to speed this up!
